Photogrammetry is the process of creating 3D models and measurements from 2D photographs.

How it works:

Image Capture: Multiple overlapping photographs of an object or scene are taken from different angles. In general, more photos with better overlap between neighboring shots produce a more accurate final model.

Image Processing: Specialized software analyzes the photographs, identifying common points and features in the images.

3D Reconstruction: By analyzing the relative positions of these common points in the different images, the software calculates the 3D position of these points in space, effectively reconstructing the shape and dimensions of the object or scene.
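The last two steps can be sketched in a few lines of Python. This is a minimal, hypothetical example rather than how any particular software works. It assumes feature descriptors have already been extracted as plain lists of numbers (real tools use detectors such as SIFT), and that each camera's position and its viewing ray toward a point are already known, which in practice requires camera calibration and pose estimation. Matching uses a nearest-neighbour ratio test. Triangulation uses the simple midpoint method, one of several approaches (linear least-squares methods are also common). All function names are illustrative.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def match_features(desc_a, desc_b, ratio=0.8):
    """Pair each descriptor in image A with its nearest neighbour in image B,
    keeping the pair only if it is clearly better than the second-best candidate."""
    matches = []
    for i, da in enumerate(desc_a):
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if not ranked:
            continue
        best_dist, best_j = ranked[0]
        second_dist = ranked[1][0] if len(ranked) > 1 else math.inf
        if best_dist < ratio * second_dist:  # reject ambiguous matches
            matches.append((i, best_j))
    return matches

def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two viewing rays c + t*d (one per camera):
    find the closest point on each ray and return their midpoint."""
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1 = [ci + t1 * di for ci, di in zip(c1, d1)]
    p2 = [ci + t2 * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2 for x, y in zip(p1, p2))

# Toy descriptors: each point in A has one clear partner in B.
print(match_features([[0, 0], [10, 10]], [[10.1, 10], [0.1, 0], [5, 5]]))  # [(0, 1), (1, 0)]
# Two cameras 1 unit apart, both looking at the same point (0.5, 0.2, 3).
print(triangulate_midpoint((0, 0, 0), (0.5, 0.2, 3), (1, 0, 0), (-0.5, 0.2, 3)))
```

With real, noisy measurements the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used.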

Types of Photogrammetry:

Aerial Photogrammetry: Uses images taken from aircraft or drones to create maps, terrain models, and other geospatial data.

Close-Range Photogrammetry: Uses images taken from closer distances, often with handheld cameras, to create 3D models of smaller objects, buildings, or even archaeological artifacts.
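For aerial surveys, overlap is usually planned before the flight. The sketch below uses the standard ground sample distance (GSD) relation for a camera pointing straight down over flat terrain: GSD = sensor width × altitude / (focal length × image width in pixels). It then derives how far apart consecutive shots can be for a target forward overlap. The function names and the camera values in the example are illustrative assumptions, not settings for any particular drone, and square pixels are assumed.

```python
def ground_sample_distance(sensor_width_mm, focal_length_mm, altitude_m, image_width_px):
    """Size on the ground (metres) covered by one pixel, for a nadir camera over flat terrain."""
    return (sensor_width_mm / focal_length_mm) * altitude_m / image_width_px

def photo_spacing(gsd_m, image_px_along_track, overlap):
    """Distance (metres) between consecutive shots so neighbouring photos overlap by `overlap` (0-1)."""
    footprint_m = gsd_m * image_px_along_track  # ground length covered by one photo
    return footprint_m * (1.0 - overlap)

# Illustrative camera: 13.2 mm sensor, 8.8 mm lens, 5472 x 3648 px image, flying at 100 m.
gsd = ground_sample_distance(13.2, 8.8, 100.0, 5472)
print(f"GSD: {gsd * 100:.2f} cm/px")                                      # GSD: 2.74 cm/px
print(f"Spacing at 80% overlap: {photo_spacing(gsd, 3648, 0.8):.1f} m")  # Spacing at 80% overlap: 20.0 m
```

Flying lower or using a longer lens gives a smaller GSD (finer detail), but each photo then covers less ground, so more photos are needed for the same area.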

Applications:

Archaeology: Documenting and preserving historical sites and artifacts.

Surveying and Mapping: Creating accurate maps and terrain models.

Construction and Engineering: Monitoring construction progress, inspecting structures, and creating as-built models.

Cultural Heritage: Creating virtual tours of museums and historical sites.

Gaming and Entertainment: Creating realistic 3D models for video games and movies.

Forensics: Documenting crime scenes and accident sites.

Advantages:

Relatively inexpensive: Compared to other 3D scanning methods, photogrammetry can be more affordable, especially for larger objects or areas.

Captures texture and color: Photogrammetry can capture detailed surface texture and color information, resulting in realistic models.

Non-contact method: It doesn’t require physical contact with the object, making it suitable for delicate or inaccessible objects.

Limitations:

Requires good lighting and image quality: Poor lighting or blurry images can affect the accuracy of the results.

Can be computationally intensive: Processing large numbers of images can require significant computing power.

Challenging for objects with reflective or transparent surfaces: These surfaces look different from each viewpoint, which makes it hard for the software to detect and match the same features across photos.

TOOLS

Ani3D: https://videoconverter.wondershare.com/3d-video-converter.html

A 3D video converter that can convert 2D videos to 3D with one click. It also supports VR 3D video conversion.

Owl3D: https://www.owl3d.com/

An AI-powered 2D to 3D video converter that can convert photos and videos on your phone. It also has a batch processing feature.

FlexClip: https://www.flexclip.com/

An online AI 2D image to 3D animation converter that allows you to choose the animation style, adjust intensity and duration, and more.

Immersity AI: https://www.immersity.ai/

A tool that allows you to convert images to 3D motion videos. You can preview different motion styles, adjust the style of your image, and fine-tune the depth.

Other ways to create 3D models

You can use software to reconstruct an object as a 3D model by taking enough images of the object from different angles.

You can use videogrammetry, which extracts still frames from video footage and uses them to create 3D models.
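A common first step in videogrammetry is choosing which frames to export as stills before running the usual photogrammetry pipeline. The helper below is a hypothetical sketch that spreads a chosen number of frames evenly across a clip. It only computes frame numbers; actually decoding the video would need a separate tool or library.

```python
def frame_indices(total_frames, wanted):
    """Evenly spaced frame numbers to export from a clip with `total_frames` frames."""
    if wanted <= 0:
        return []
    if wanted >= total_frames:
        return list(range(total_frames))  # short clip: use every frame
    step = total_frames / wanted
    return [int(i * step) for i in range(wanted)]

# A 30-second clip at 30 fps has 900 frames; export 60 of them (one every 15 frames).
picks = frame_indices(30 * 30, 60)
print(picks[:4], picks[-1])  # [0, 15, 30, 45] 885
```

Slow, steady camera movement helps here, since blurry frames reduce accuracy just as blurry photos do.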

RADIANCE FIELDS & NeRF

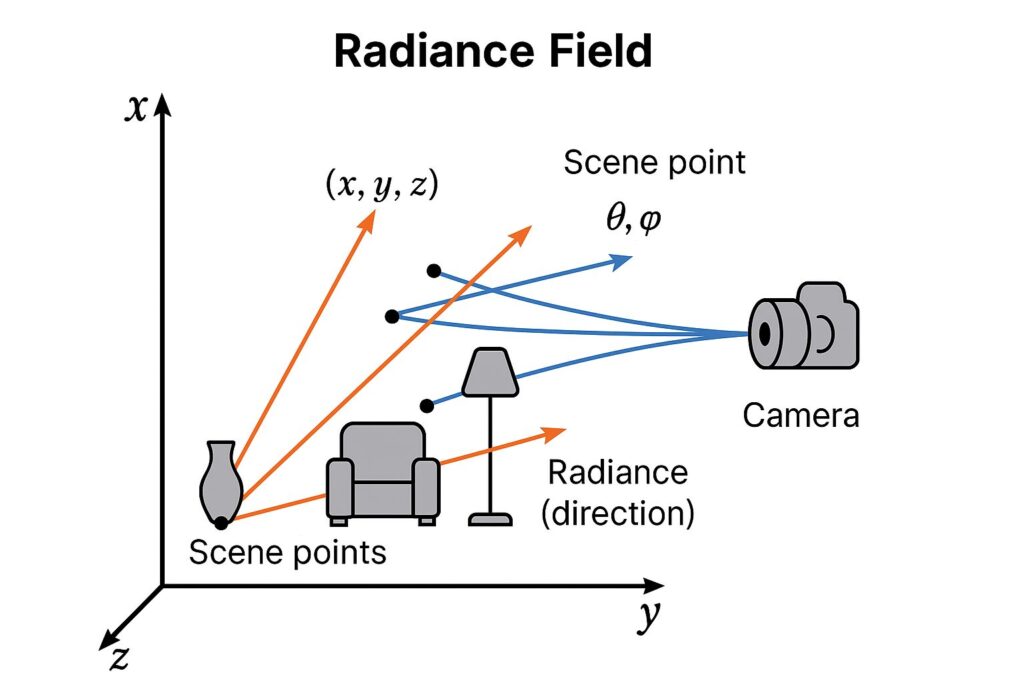

What is a Radiance Field?

A radiance field is a function that describes how light radiates from points in 3D space in all directions. It maps 3D coordinates (x, y, z) and a viewing direction (θ, φ) to color and density values. In neural rendering, it enables the synthesis of realistic views from new perspectives.
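In symbols, the function described above is often written as below, where Θ stands for the learned parameters (for NeRF, the weights of a neural network). This assumes θ is the polar angle and φ the azimuth of the viewing direction. In NeRF, the density σ depends only on the position, while the color c also depends on the viewing direction.

```latex
F_\Theta : (x, y, z, \theta, \phi) \longmapsto (\mathbf{c}, \sigma), \qquad \mathbf{c} = (r, g, b)

\mathbf{d}(\theta, \phi) = (\sin\theta \cos\phi,\; \sin\theta \sin\phi,\; \cos\theta)
```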

How NeRF Works

- Input = Photos of a Scene: multiple photos of a scene taken from different angles.

- Each Photo = Light Rays: each pixel corresponds to a ray of light traveling from the scene into the camera.

- NeRF Learns a Function: predicts color and density for any 3D point and view direction.

- Ray Marching: samples multiple points along each ray and accumulates their colors, weighted by density and by how much light is still unblocked at each point.

- Output = Novel Views: can generate images from new viewpoints.
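The ray-marching step can be sketched in Python. This is a minimal, hypothetical example rather than NeRF itself: a hand-written `toy_field` (a dense red sphere) stands in for the trained network and ignores view direction, and samples are evenly spaced instead of using NeRF's stratified and hierarchical sampling. Each sample's contribution follows the standard volume-rendering quadrature: its opacity 1 - exp(-σ·δ), where δ is the spacing between samples, is weighted by the transmittance, the fraction of light not yet absorbed by earlier samples.

```python
import math

def sample_along_ray(origin, direction, near, far, n):
    """Evenly spaced sample points r(t) = o + t*d between near and far."""
    step = (far - near) / n
    ts = [near + (i + 0.5) * step for i in range(n)]  # midpoints of n equal bins
    points = [tuple(o + t * d for o, d in zip(origin, direction)) for t in ts]
    return points, [step] * n

def toy_field(point):
    """Hypothetical stand-in for a trained network: a dense red unit sphere."""
    inside = sum(c * c for c in point) <= 1.0
    sigma = 10.0 if inside else 0.0
    return sigma, (1.0, 0.0, 0.0)

def render_ray(field, origin, direction, near=0.0, far=6.0, n=64):
    points, deltas = sample_along_ray(origin, direction, near, far, n)
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that reaches this sample unblocked
    for p, delta in zip(points, deltas):
        sigma, color = field(p)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(rgb)

print(render_ray(toy_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0)))  # hits the sphere: ≈ (1.0, 0.0, 0.0)
print(render_ray(toy_field, (0.0, 3.0, -3.0), (0.0, 0.0, 1.0)))  # misses it: (0.0, 0.0, 0.0)
```

Because the weights shrink as transmittance drops, a dense surface early along the ray hides whatever lies behind it, which is how occlusion emerges without any explicit surface model.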

Tools for No-Code NeRF:

Luma AI (iPhone only): https://lumalabs.ai/

Nerfstudio: https://docs.nerf.studio/

Polycam: https://poly.cam/

GAUSSIAN SPLATTING

Gaussian splatting creates a 3D representation of a scene from a set of images or videos by first converting them into a sparse 3D point cloud, then representing each point as a 3D Gaussian (a soft, semi-transparent, ellipsoid-shaped blob). These Gaussians are then optimized to match the input images using gradient descent, resulting in a 3D model where each Gaussian represents a small piece of the scene’s structure and appearance.

Input:

A set of images or videos of a static scene is provided, along with their corresponding camera positions.

- Structure from Motion (SfM):

SfM techniques are used to estimate the camera positions (if they are not already known) and a sparse 3D point cloud from the input images, essentially creating a rough representation of the scene’s geometry.

- Gaussian Conversion:

Each point in the sparse point cloud is then converted into a 3D Gaussian. Each Gaussian is defined by its position (mean), covariance matrix (which controls its size, shape, and orientation), opacity, and color (in the original method, stored as spherical harmonic coefficients so that color can vary with viewing direction).

- Optimization:

A training process, often using stochastic gradient descent, optimizes the parameters of the Gaussians (position, covariance, opacity, and color) to match the input images. This process involves rendering the scene from the same camera positions as the input images and comparing the rendered images with the original input images.

- Rendering:

At rendering time, a process called Gaussian rasterization projects each Gaussian onto the screen as a 2D ellipse, sorts the Gaussians by depth, and blends their colors front to back to produce each pixel, creating a photorealistic view of the scene.
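The rendering step can be sketched for a single pixel. This hypothetical example assumes each Gaussian has already been projected to the screen as a 2D ellipse (a center and a 2×2 covariance, plus opacity, color, and depth). It evaluates each splat's opacity at the pixel, sorts splats from nearest to farthest, and blends them front to back. The class name, the clamping value, and the early-exit thresholds are illustrative choices; real implementations process many pixels in parallel on the GPU.

```python
import math
from dataclasses import dataclass

@dataclass
class Splat2D:
    """A Gaussian already projected to the screen (hypothetical structure)."""
    mean: tuple       # (x, y) center in pixels
    cov: tuple        # ((a, b), (c, d)) 2x2 screen-space covariance
    opacity: float    # learned opacity in [0, 1]
    color: tuple      # (r, g, b) in [0, 1]
    depth: float      # distance from the camera, used for sorting

def splat_alpha(s, px, py):
    """Opacity contributed by splat s at pixel (px, py)."""
    (a, b), (c, d) = s.cov
    det = a * d - b * c
    inv = ((d / det, -b / det), (-c / det, a / det))  # inverse of the 2x2 covariance
    dx, dy = px - s.mean[0], py - s.mean[1]
    # Squared Mahalanobis distance: how far the pixel is from the center, in "standard deviations".
    m = dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy)
    return s.opacity * math.exp(-0.5 * m)

def shade_pixel(splats, px, py, background=(0.0, 0.0, 0.0)):
    """Front-to-back alpha blending of all splats covering one pixel."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # how much light still passes through to splats behind
    for s in sorted(splats, key=lambda s: s.depth):  # nearest first
        alpha = min(0.99, splat_alpha(s, px, py))
        if alpha < 1.0 / 255.0:
            continue  # negligible contribution
        for k in range(3):
            rgb[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque
    return tuple(rgb[k] + transmittance * background[k] for k in range(3))

near = Splat2D((10.0, 10.0), ((4.0, 0.0), (0.0, 4.0)), 0.5, (1.0, 0.0, 0.0), depth=1.0)
far = Splat2D((10.0, 10.0), ((9.0, 0.0), (0.0, 9.0)), 1.0, (0.0, 0.0, 1.0), depth=2.0)
print(shade_pixel([far, near], 10.0, 10.0))  # half-transparent red in front of blue
```

Sorting by depth is what makes the result independent of the order in which splats are stored.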

In simpler terms: Imagine taking many photos of an object from different angles. Gaussian splatting takes those photos, uses them to create a 3D model of the object, and then represents that model with a collection of blurry clouds (Gaussians). These clouds are then adjusted to match the original photos, resulting in a realistic 3D representation.
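The "adjusting" described here is ordinary gradient descent. As a deliberately tiny, hypothetical analogue, the sketch below fits the height and center of a single 1D Gaussian to a target curve by repeatedly computing the mean squared error and stepping each parameter against its gradient. Real Gaussian splatting optimizes the position, covariance, opacity, and color of many 3D Gaussians at once, compares rendered images with the photos, and typically uses an adaptive optimizer such as Adam; none of that is shown here.

```python
import math

def gaussian(x, amp, mu, sigma=1.0):
    return amp * math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))

def fit_gaussian(xs, target, amp=0.4, mu=1.2, lr=0.5, steps=3000, sigma=1.0):
    """Fit amplitude and center of one Gaussian to `target` by gradient descent on MSE."""
    n = len(xs)
    for _ in range(steps):
        grad_amp = grad_mu = 0.0
        for x, t in zip(xs, target):
            e = math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))
            residual = amp * e - t                    # prediction minus target
            grad_amp += 2 * residual * e / n          # d(MSE)/d(amp)
            grad_mu += 2 * residual * amp * e * (x - mu) / sigma ** 2 / n  # d(MSE)/d(mu)
        amp -= lr * grad_amp
        mu -= lr * grad_mu
    return amp, mu

xs = [-3 + 0.1 * i for i in range(101)]      # sample positions from -3 to 7
target = [gaussian(x, 0.8, 2.0) for x in xs]  # the "photo" we want to reproduce
print(fit_gaussian(xs, target))              # converges close to (0.8, 2.0)
```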

GAUSSIAN SPLATTING TOOLS

Cloud-based services:

Luma AI: Known for its mobile and web platform, Luma AI lets you create NeRFs and Gaussian splats from your phone or computer.

Polycam: Polycam offers both a mobile app and a web platform for creating Gaussian splats. They also provide tools for editing and exporting your splats.

Desktop Software:

Postshot: Postshot allows you to generate and train 3D Gaussian Splatting models on your own computer, using your computer’s graphics card.

Nerfstudio: Nerfstudio is another option for training Gaussian splats on your own computer (through its splatfacto method).

Mobile Apps:

Polycam: As mentioned, Polycam also has a mobile app for creating splats.

Scaniverse: Scaniverse is specifically designed to process splats on your device, offering advantages in terms of privacy and speed.

Other tools:

COLMAP: A structure-from-motion (SfM) tool that estimates camera positions and a sparse point cloud from a set of images; this output can then be used as the starting point for a Gaussian splat.

Blender: Blender has a plugin for working with Gaussian splats.

Unity: Unity also has a plugin for working with Gaussian splats.

Unreal Engine: Unreal Engine has a 3D Gaussian plugin for working with Gaussian splats.
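Because COLMAP is the step that produces the sparse point cloud, here is a hedged sketch of driving its command-line interface from Python. The three commands (feature extraction, exhaustive matching, and mapping) follow the sequence in COLMAP's command-line documentation, but the folder names are hypothetical and options can differ between versions, so check the COLMAP documentation for your install. By default the script only prints the commands; passing `dry_run=False` runs them, which requires COLMAP to be installed and on your PATH.

```python
import subprocess
from pathlib import Path

def colmap_sfm_commands(workspace, image_dir):
    """Build the basic COLMAP SfM command sequence (feature extraction, matching, mapping)."""
    db = str(Path(workspace) / "database.db")
    sparse = str(Path(workspace) / "sparse")
    return [
        ["colmap", "feature_extractor", "--database_path", db, "--image_path", image_dir],
        ["colmap", "exhaustive_matcher", "--database_path", db],  # compares every image pair
        ["colmap", "mapper", "--database_path", db, "--image_path", image_dir,
         "--output_path", sparse],  # writes camera poses and sparse points
    ]

def run_sfm(workspace, image_dir, dry_run=True):
    commands = colmap_sfm_commands(workspace, image_dir)
    if not dry_run:
        Path(workspace, "sparse").mkdir(parents=True, exist_ok=True)  # mapper writes into this folder
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop if a step fails

# Hypothetical project layout: photos in my_scan/images
run_sfm("my_scan", "my_scan/images")
```

Exhaustive matching compares every pair of photos, which is usually manageable for small captures; for frames extracted from video, COLMAP also offers a `sequential_matcher`.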

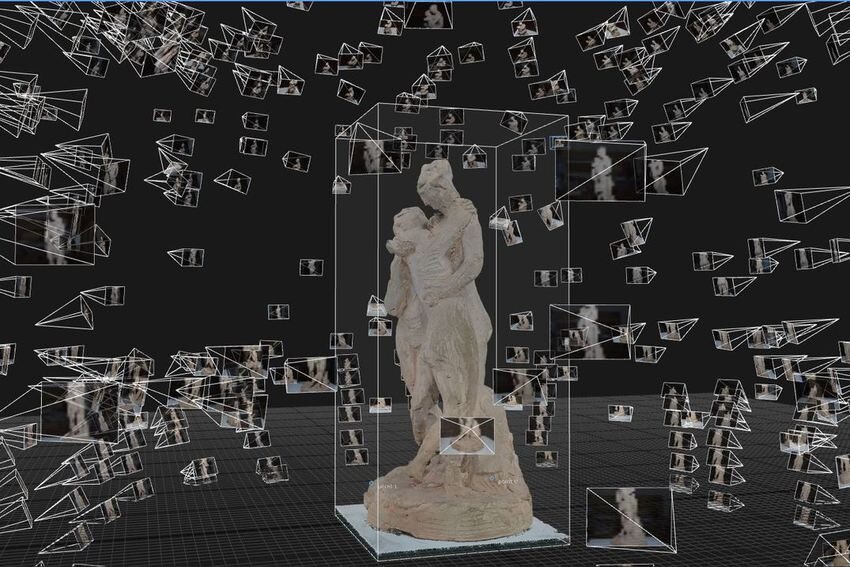

EXAMPLES

https://www.factum-arte.com/pag/1345/PHOTOGRAMMETRY

‘Point cloud’ of the Three Graces by Antonio Canova © Factum Foundation

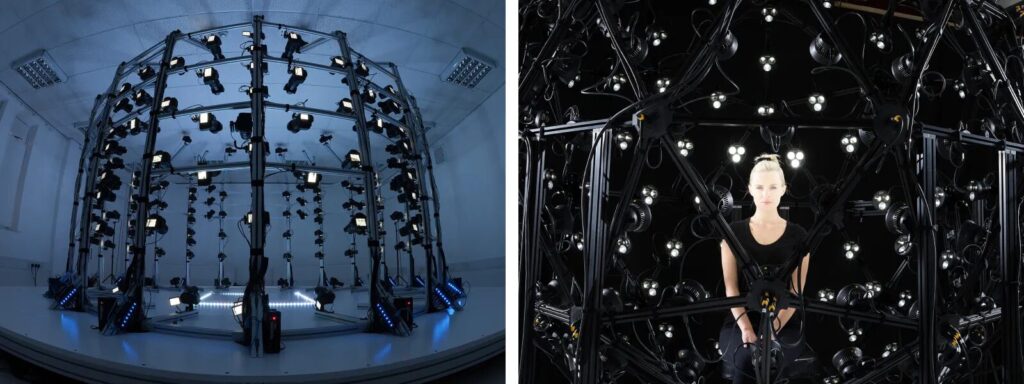

https://www.pix-pro.com/blog/human-photogrammetry

PHOTOGRAMMETRY TOOLS

Kiri Engine App: https://www.kiriengine.app/

PhotoCatch (Mac / iOS): https://www.photocatch.app/

Android (guide to RealityScan photogrammetry on Android): https://peterfalkingham.com/2023/11/24/realityscan-photogrammetry-on-android/

RealityScan (Epic Games; iOS/Android; free): https://www.unrealengine.com/en-US/realityscan

3D GAUSSIAN SPLATTING

ADOBE SUBSTANCE 3D SAMPLER

MAIN LINK: https://www.adobe.com/learn/substance-3d

YouTube: https://www.youtube.com/@Substance3D

You CAN export a model as GLB directly from Substance 3D Sampler.

ASSIGNMENT

11 • Human Spatial Photography

11A – Using any camera (including mobile phones and tablets) and Substance 3D, you will create a distinct 3D model of a human being (it can be your roommate, a family member, an actor, a friend, etc.). Document the entire process (behind the scenes, resulting products). You must capture at least 20 photos to generate the spatial representation. Screen-record your results and share them as MP4 movies. Also share the models as GLB files to be embedded in the website.

11B – Using any camera (including mobile phones and tablets) and Substance 3D, you will create a distinct 3D model of your room, living room, or another shared space. Use an enclosed space with a ceiling and four walls. Document the entire process (behind the scenes, resulting products). You must capture at least 20 photos to generate the spatial representation. Screen-record your results and share them as MP4 movies. Also share the models as GLB files to be embedded in the website.